Search Engine Optimisation

NOTE: Hosting my website on the itstud.hiof domain resulted in my website (path) being inaccessible to crawlers and therefore absent from search results. To remedy this, I hosted my site on GitHub for the purposes of generating a sitemap and viewing search engine results. My sitemap and several URLs therefore relate to the GitHub domain.

Sitemap and Crawlers

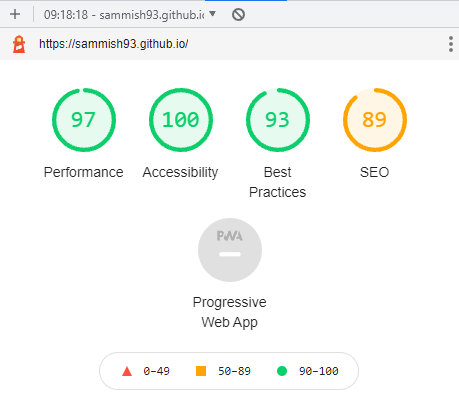

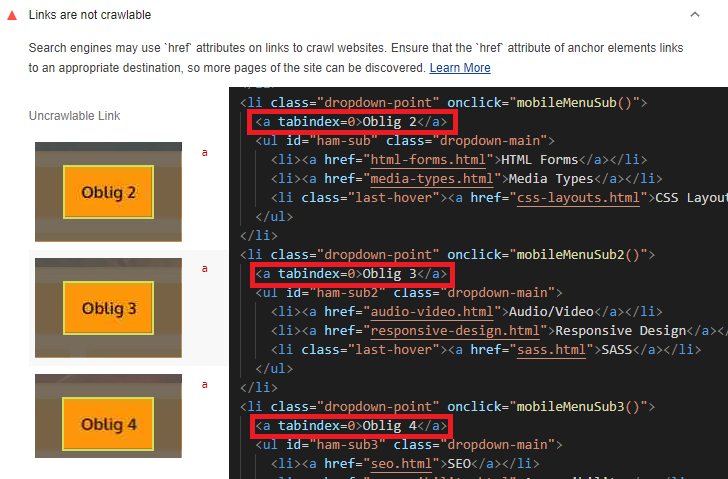

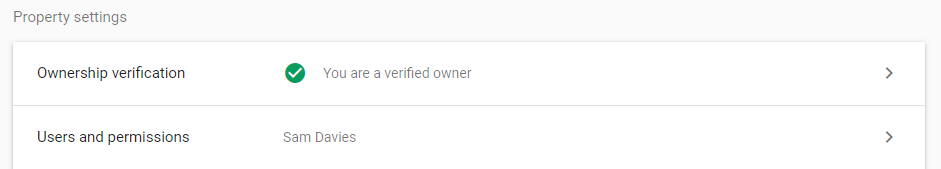

View the sitemap of this website

First things first, I set out to get my site indexed by Google. Since my website is completely new and no other websites link to it, I made use of Google Search Console. Firstly, I registered myself as owner of the URL. I then supplied Google with a sitemap generated via xml-sitemaps.com and requested that its crawlers index my site using that sitemap. If my website hosted on the itstud.hiof domain were accessible to crawlers, I would create a robots.txt file restricting crawler access to the 'Oblig 2' and 'Oblig 3' versions of my site. They are only hosted for archive and reference purposes and aren't optimised, so a visitor landing on one of them would likely be confused.
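robots.txt is a plain-text file rather than HTML. As a page-level alternative, and a different mechanism from the robots.txt approach described above, each archived page could carry a robots meta tag asking crawlers not to index it. A minimal sketch, assuming the 'Oblig 2' and 'Oblig 3' pages can be edited (the title and body text are placeholders):

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <!-- Placeholder title for an archived version of the site -->
  <title>Oblig 2 (archived version)</title>
  <!-- Asks compliant crawlers not to list this page in search results -->
  <meta name="robots" content="noindex">
</head>
<body>
  <p>This is an archived version of the site, kept for reference.</p>
</body>
</html>
```

For the tag to be seen, crawlers must still be allowed to fetch the page, so it should not be combined with a robots.txt rule that blocks the same URL.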

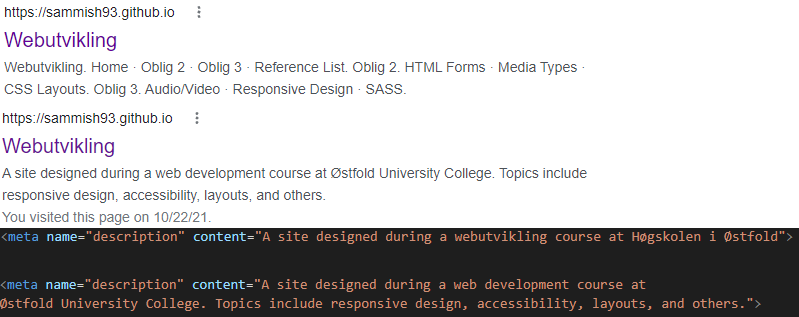

Metadata

To help my site appear in relevant searches, I added metadata that gives search engines more information to work with. By using the <meta> tag in the <head> of the HTML code, I can supply additional information about the website without changing its appearance or structure. Using the name and content attributes, I can set properties such as 'keywords', 'description', and 'author'. Search engines can then use this information to generate a more accurate search result, complete with a summary of the site. The sitemap can also be used to display additional subpages in the result (this page is a subpage of index.html).
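As a rough illustration of the tags described above, a page's <head> might look like the following. The content values are placeholders, not the values used on this site:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <!-- Not a <meta> tag, but search engines commonly use the title as the result headline -->
  <title>Search Engine Optimisation</title>
  <!-- Placeholder values: replace with the real summary, keywords, and author -->
  <meta name="description" content="Notes on how this site was optimised for search engines.">
  <meta name="keywords" content="SEO, sitemap, metadata, structured data">
  <meta name="author" content="Author Name">
</head>
<body>
  <h1>Search Engine Optimisation</h1>
</body>
</html>
```

Support for these names varies between search engines. Google, for instance, has said it does not use the keywords tag for web ranking, so the description is usually the more valuable of the three.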

Microdata and Structured Data

Structured data such as microdata and JSON-LD gives search engines access to additional information about a web page, which they can then process for use in search results. If appearing in relevant results is the goal, adding structured data to a website can help considerably. One example is a local plumbing business. With structured data, search engines can be supplied with crucial information such as geographic location. A local plumbing business may not need to appear on the front page of a generic search such as 'plumbing', but it benefits far more from appearing on the front page of a more specific search such as 'plumbing in <location>'. Appearing in search results for a wide variety of people has little value to the local plumbing business if visitors to the website don't get in touch and order a service.
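To make the plumbing example concrete, here is a minimal sketch of JSON-LD embedded in a page, using the schema.org Plumber type. The business name, URL, phone number, and address are all invented placeholders:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Example Plumbing</title>
  <!-- JSON-LD structured data; every value below is a made-up placeholder -->
  <script type="application/ld+json">
  {
    "@context": "https://schema.org",
    "@type": "Plumber",
    "name": "Example Plumbing",
    "url": "https://www.example.com/",
    "telephone": "+00 000 00 000",
    "address": {
      "@type": "PostalAddress",
      "streetAddress": "Example Street 1",
      "addressLocality": "Example Town",
      "postalCode": "0000"
    },
    "areaServed": "Example Town"
  }
  </script>
</head>
<body>
  <h1>Example Plumbing</h1>
  <p>Plumbing services in Example Town.</p>
</body>
</html>
```

The script block is not rendered, so the page looks the same to visitors. The address and areaServed properties are what let a search engine connect the business to a location-specific query.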

Read more about microdata

Other

Overall, I interpreted the guidelines regarding SEO as boiling down to the following points:

- Optimise text, especially links and buttons, to be more specific

- Ensure that the website is easy to navigate, with a hierarchical sitemap often being a suitable choice

- Follow standard practices outlined by the W3C

- Incorporate semantic markup whenever possible

- Ensure that the site is responsive and works on mobile devices as well as larger screens

- Improve accessibility wherever needed, for example with keyboard (tab) navigation and additional ARIA attributes

- Ensure that the site loads quickly by avoiding unnecessarily large video and image files
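Several of the points above can be seen together in a small page skeleton. This is only a sketch under assumptions: the file names (index.html, seo.html, photo-small.jpg) and all text are hypothetical, and the styling needed for a responsive layout is left out.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <!-- Lets the layout scale to the device width, a prerequisite for responsive CSS -->
  <meta name="viewport" content="width=device-width, initial-scale=1">
  <title>Example page</title>
</head>
<body>
  <header>
    <!-- aria-label names this landmark, which helps if the page has several <nav> elements -->
    <nav aria-label="Main">
      <ul>
        <li><a href="index.html">Home</a></li>
        <!-- Specific link text instead of a generic "click here" -->
        <li><a href="seo.html">Search engine optimisation notes</a></li>
      </ul>
    </nav>
  </header>
  <main>
    <h1>Example page</h1>
    <!-- width and height reserve space; loading="lazy" defers off-screen images -->
    <img src="photo-small.jpg" alt="Description of the photo" width="640" height="360" loading="lazy">
    <!-- A native <button> is reachable with Tab and activated with Enter or Space by default -->
    <button type="button">Show contact details</button>
  </main>
</body>
</html>
```

Using native elements such as nav, main, and button provides keyboard navigation and landmark roles without extra scripting, so ARIA attributes are only needed where the native semantics fall short.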